This or that?

If you’re tired of making email marketing decisions based on hunches (or trying to get buy-in for your plans), then some email A/B testing might be in order. With a bit of foresight and planning, you can turn your gut feelings and ideas into actionable insights to share with the entire team.

Here’s what you need to know to get up to speed on email A/B testing:

What is email A/B testing?

First things first, what does it mean to A/B test your emails?

Email A/B testing, or split testing, is the process of creating two versions of the same email with one variable changed, then sending each version to a different subset of your audience to see which one performs better.

In other words: Email A/B testing pits two emails against each other to see which is superior. You can test elements that are big or small to gain insights that help you do things like:

- Update your email design.

- Learn about your audience’s preferences.

- Improve email performance.

10 email components you can test

If you take a moment to list out all of the decisions you make for every email—from design to timing and more—you’ll realize there are plenty of testing opportunities. If you create it, you can test it. To get you started, here are ten common email components to A/B test.

1. From name

The from name is one of the few things subscribers see before they open an email. While you can experiment with it, make sure it’s always clear that the email is from your company. Don’t try anything too off-the-wall that could feel spammy. For example, Mailchimp uses a few from names, including “Mailchimp,” “Jenn at Mailchimp,” and “Mailchimp Research.”

2. Subject line

If you want to increase open rates, the subject line is the most common place to start. You can experiment with different styles, lengths, tones, and positioning. For example, Emerson A/B tested two subject lines for a free trial email with a white paper:

- Control: Free Trial & Installation: Capture Energy Savings with Automated Steam Trap Monitoring

- Variable: [White Paper] The Impact of Failed Steam Traps on Process Plants

That particular test revealed a 23% higher open rate for the subject line referencing the white paper.

3. Preview text

While the subject line arguably leads the charge in enticing a subscriber to open an email, it isn’t the only option you have. Chase Dimond, an ecommerce email marketer and course creator, notes three main levers to get someone to open your email—the from name, subject line, and preview text.

He adds,

“Most people only focus on the Subject Line. And granted, it’s very important, but you should also highly consider A/B testing the from name and preview/preheader text.”

4. HTML vs. plain text

If you mostly send either HTML or plain text emails, it might be worth seeing if the grass is greener on the other side. Here at Litmus, we A/B tested these two email styles across a few segments. Through a few tests, we found that the best messaging varies between customers and non-customers and that plain text emails have a firm place in our email lineup.

5. Length

In addition to the design of an email, you can play around with the length of the message. Here are a few questions to ask yourself:

- Do subscribers want more content and context in the message, or just enough to pique their interest?

- What length is ideal for different types of email? Or different devices?

- Do all segments prefer the same length of email?

6. Personalization

According to the 2020 State of Email report, the most popular way to personalize email is by including a name.

While this method can increase clicks, you can expand your personalization horizons. Jaina Mistry shared her experience with A/B testing name personalization,

“First name personalization in a subject line got more opens but lower conversions. The name caught people’s attention but not all of those people had the intention to take action after opening.”

Other personalization factors you can A/B test include a subscriber’s customer status, past interactions with your site, purchase history, and the emails they’ve interacted with.

7. Automation timing

Most email A/B testing focuses on what goes in an email, but you can also test when to send it. For example, you could adjust how long you wait after a person abandons their cart before sending a reminder. You can also test how many emails you send in a triggered sequence. Tyler Michael from Square shared that sending delay, timezone localization, and day of the week are all timing dimensions you can A/B test.

8. Copy

The tone and positioning of your email copy impact whether the message catches a reader’s interest or not. A/B testing in the “copy” category covers a ton of elements in your email, including:

- Body copy

- Headlines

- Button copy

9. Imagery

If you use stylized emails, try A/B testing your visuals. Do different hero images change engagement? Can you use animated GIFs in longer emails to increase read time? Does including an infographic in an email make people more likely to forward it? The possibilities are nearly endless.

10. Automated and transactional emails

The final email A/B testing factor on this list is more of a category and a reminder—don’t forget to A/B test all of your emails regularly. It’s a common mistake to only test broadcast emails, but automated and transactional emails deserve testing and improvement, too. Often, these emails are the ones doing the heavy lifting of subscriber engagement, so it’s crucial to test these always-on emails.

How to set up an email A/B test (the right way)

While email A/B testing is straightforward in theory, it can have a lot of moving parts. If you want to get accurate insights to share with your team, invest a little time planning and analyzing. Below are the steps you need to take to run a successful (and insightful) A/B test.

Choose an objective

As with many projects, you need to start your email A/B testing with the end goal in mind. Choose your hypothesis, what you want to learn, or what metric you want to improve.

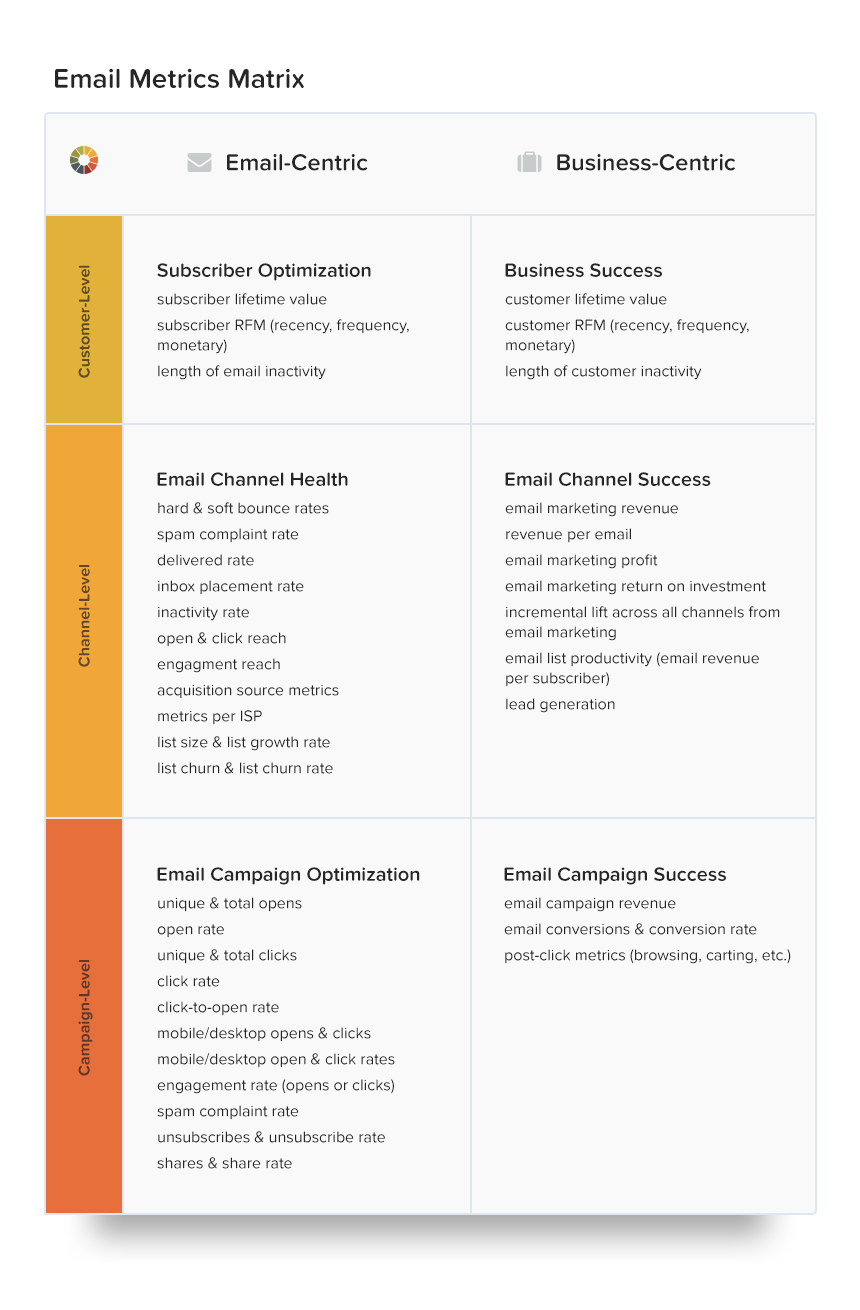

While you can use email A/B testing to improve campaign-level metrics like open rates, try to track the impact even further. For example, how does the conversion rate differ between the two emails? Since subject lines set expectations for the content, you might see their influence beyond the inbox.

Pick the variable

Once you know what effect you want to have, it’s time to pick your variable component. Make sure you’re only testing one variable at a time. If there is more than one difference between your control and variable emails, you won’t know which change moved the needle. Isolating your email A/B tests may feel a bit slower, but you’ll be able to draw informed conclusions.

Set up the parameters

The third step in your email A/B testing process has the most decisions. When you set up parameters, you decide on all of the pieces that ensure the test is organized. Your decisions include:

- How long you’ll run the test. You’ll probably be sitting on the edge of your seat waiting for results, but you need to wait up to a day for results to trickle in.

- Who will receive the test. If you want to A/B test within a particular segment, make sure you have a large enough audience for results to be statistically significant.

- Your testing split. Once you know which segments will receive the test, you have to decide how to split the send. You could do a 50/50 split where half gets the control and the other half receives the variable version. Or, you can send control version A to 20% and test version B to another 20%, then wait and send the winner to the remaining 60%.

- Which metrics you’ll measure. Figure out exactly which metrics you want and how to get the data before your test. How will you define success?

- Other confounding variables. Make a note of variables like holidays that could impact the test results but are out of your control.
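The testing split described above can be sketched in a few lines of Python. This is a minimal illustration, not a feature of any particular ESP: the function name `split_audience`, the `test_fraction` parameter, and the example addresses are all hypothetical. It shuffles the list with a fixed seed so the assignment is random but reproducible, then carves out two equal test groups and a holdout that would receive the winner.

```python
import random

def split_audience(subscribers, test_fraction=0.2, seed=42):
    """Randomly assign subscribers to group A, group B, and a holdout.

    test_fraction is the share of the list that goes to EACH test group,
    so 0.2 gives a 20/20/60 split and 0.5 gives a plain 50/50 split.
    """
    if not 0 < test_fraction <= 0.5:
        raise ValueError("test_fraction must be in (0, 0.5]")
    shuffled = list(subscribers)           # copy so the input is untouched
    random.Random(seed).shuffle(shuffled)  # seeded for a reproducible split
    n_test = int(len(shuffled) * test_fraction)
    group_a = shuffled[:n_test]            # receives the control
    group_b = shuffled[n_test:2 * n_test]  # receives the variable version
    holdout = shuffled[2 * n_test:]        # receives the winner later
    return group_a, group_b, holdout

# Hypothetical list of 1,000 addresses, split 20/20/60.
subscribers = [f"user{i}@example.com" for i in range(1000)]
group_a, group_b, holdout = split_audience(subscribers, test_fraction=0.2)
print(len(group_a), len(group_b), len(holdout))
```

Random assignment matters here: splitting alphabetically or by signup date could put systematically different subscribers in each group and muddy the result.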

Run the test

Email A/B testing is all about options, and that includes how you run the test. The two main ways to run the test are:

- Set up the A/B test in your ESP to run automatically. Letting your ESP manage the split-sending could be a little easier to manage and is a good option for simple tests with more surface-level goals like increasing open rates.

- Manually split the send. Setting up the two separate emails and manually sending the emails is more hands-on but can give you a cleaner look at data beyond your ESP (like website engagement). Use this method if you want to track results beyond the campaign.
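If you split the send manually and want to follow each version into your web analytics, one common approach is to tag the links in each email with UTM parameters. That approach is an assumption here rather than something this article prescribes. The sketch below uses Python's standard `urllib.parse` module; the function name `tag_link`, the campaign name, and the variant labels are hypothetical.

```python
from urllib.parse import parse_qsl, urlencode, urlparse, urlunparse

def tag_link(url, campaign, variant):
    """Append UTM parameters so clicks can be attributed to one variant."""
    parts = urlparse(url)
    # Keep any query parameters the link already has.
    query = dict(parse_qsl(parts.query, keep_blank_values=True))
    query.update({
        "utm_source": "newsletter",   # assumed source label
        "utm_medium": "email",
        "utm_campaign": campaign,
        "utm_content": variant,       # distinguishes version A from B
    })
    return urlunparse(parts._replace(query=urlencode(query)))

# Same destination, tagged differently for each version of the email.
print(tag_link("https://example.com/pricing", "spring_sale", "version_a"))
print(tag_link("https://example.com/pricing", "spring_sale", "version_b"))
```

With the variant recorded in `utm_content`, an analytics tool that reads UTM parameters can report site behavior separately for each version.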

Analyze the results

Once it’s time to analyze the results, you’ll be grateful for every moment spent carefully planning. Since you went in with a clear idea, you know what to look for once the test is over. Now you need to compare results and share them with your team.

Unless you want to test again. Listen, you don’t have to, but there is a chance that your variable email got a boost from the “shiny new factor.” If you want to confirm the first test results, you can run a similar test (with the same cohort!) to see if the learnings remain true.

Magan Le shared on Twitter,

“I did one that was all-image vs. live text. The all-image one got a higher open rate but a lower CTOR. My theory was that people were curious and turned images on to see it, but they realized they weren’t interested once they saw it. Whereas the live text folks were better primed.”
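When comparing results, it helps to check whether the gap between two rates is bigger than chance alone would explain. One standard way to do that is a two-proportion z-test, sketched below with only the Python standard library. The function name and the send and open counts are hypothetical, and the test assumes groups large enough for the normal approximation to hold.

```python
from math import erfc, sqrt

def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Two-sided z-test for the difference between two rates.

    Returns (rate_a, rate_b, z, p_value).
    """
    rate_a = successes_a / n_a
    rate_b = successes_b / n_b
    # Pooled rate under the assumption that both versions perform the same.
    pooled = (successes_a + successes_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        raise ValueError("rates are all 0% or 100%; the test is undefined")
    z = (rate_b - rate_a) / std_err
    p_value = erfc(abs(z) / sqrt(2))  # two-sided p-value, normal distribution
    return rate_a, rate_b, z, p_value

# Hypothetical counts: 5,000 sends per version, 1,000 vs. 1,100 opens.
rate_a, rate_b, z, p = two_proportion_z_test(1000, 5000, 1100, 5000)
print(f"open rate A={rate_a:.1%}, B={rate_b:.1%}, z={z:.2f}, p={p:.4f}")
```

The same function works for other rates. For click-to-open rate (CTOR), pass clicks as the successes and opens as the group size, which lets you check a pattern like a higher open rate paired with a lower CTOR.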

Dig deeper into your email A/B tests with Litmus

All ESPs give you access to the same standard email metrics, and you can also tap into things like web analytics to understand behavior outside of the inbox. If you want to dig deeper into your A/B tests with advanced metrics, Litmus can help you do more.

Here are just a few use cases where Litmus Email Analytics could improve your insights:

- Compare the read rates between variations, not just open rates, to see which subject line version attracted the most engaged subscribers.

- Understand which content leads to the highest share rate so you can leverage learnings to grow your referral program.

- Analyze A/B test results between email clients and devices, particularly if what you’re testing doesn’t have broad email client support.

Once you have your advanced analytics reports and findings, Litmus makes it easy to share the results with your team. Then, you can optimize and drive strategies in email and the rest of your marketing channel mix.

Build, QA test, and analyze results in one place

Empower your team to create better emails. And get everything you need to build, test, and perfect your way to higher-performing email campaigns with Litmus.

The post How to Do Email A/B Testing Right (+ 10 Easy Ideas to Start Now) appeared first on Litmus.
